(en) Wikidataficator


Summary of features

This bot retrieves the Wikidata identifier of every character on Wikipast and inserts it into that character's Wikipast page.

Technical description

The bot retrieves the list of all the characters present on Wikipast by searching for the "Q5" flag. This flag corresponds to the item "human" on Wikidata and indicates that an entry is a person and not, for example, a work. The Q5 flag is inserted on Wikipast for every character by the GallicaSPARQLBot. After listing all the characters, the Wikidataficator iterates over the entries and processes each one as follows:

It searches Wikidata for the name of the character in question. Two scenarios are then possible:

- No search results appear on Wikidata. The bot then inserts "Match not found" on Wikipast, with a link for creating a new page on Wikidata.

- Search results are available on Wikidata.

In the second case, the Wikidataficator applies the following search algorithm:

- On Wikipast, the character has an identifier from the National Library of France (Bibliothèque nationale de France; this BNF ID is also inserted by the GallicaSPARQLBot). If a BNF ID is also available on Wikidata, the two identifiers are compared:

- If these two numbers are equal, then the character is selected, and his Wikidata ID number is inserted on Wikipast. The bot then goes to the next character on Wikipast.

- If these two numbers are not equal, the bot checks whether the birth and death dates of the character on Wikidata match those on Wikipast. If they do, this character is saved as a "guess", and the bot continues to compare the Wikipast character with the following Wikidata entries to see whether a matching BNF ID can be found (which would be a better match, because a BNF ID is supposed to be unique and should not be wrong).

- If the BNF ID is not available on Wikipast or on Wikidata, the characters are compared using their dates of birth and death.

- If both dates match on Wikipast and Wikidata, the character will be selected and his Wikidata ID will be inserted on Wikipast. The Wikidataficator then moves to the next Wikipast character.

- If only one of the two dates matches, the character is selected and his Wikidata ID is inserted on Wikipast, with the addition of "Uncertain identification". The Wikidataficator then moves to the next Wikipast character.

- If neither date matches, the Wikidataficator continues the search for this Wikipast character by comparing it with the next Wikidata entry.

- If no match has been found once all Wikidata entries have been processed for a given Wikipast character, there are three possibilities:

- The bot had recorded a "guess" when comparing different BNF IDs. It chooses this character and inserts his Wikidata ID on Wikipast, with the mention "Uncertain identification".

- The bot did not record any guess, and the character had birth and death dates on Wikipast. "Match not found" is inserted on Wikipast, with a link for creating a new page on Wikidata.

- The character on Wikipast had no date of birth and no date of death, and no guess was recorded. The bot then takes the first character in the Wikidata result list and inserts it on Wikipast with the mention "Uncertain identification".

See the "Example of results" section for an illustration of these different results.
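The decision procedure described in this section can be summarised as a small pure function. This is only a sketch that follows the prose above, not the bot's actual code: the `Person` record and the `choose_match` function are hypothetical names introduced for illustration, and dates are assumed to be already reduced to a year string.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class Person:
    """Hypothetical record holding only the fields the comparison needs."""
    qid: Optional[str] = None         # Wikidata ID (None for a Wikipast entry)
    bnf_id: Optional[str] = None
    birth_year: Optional[str] = None
    death_year: Optional[str] = None


def choose_match(wikipast: Person,
                 candidates: Sequence[Person]) -> Tuple[Optional[str], bool]:
    """Return (wikidata_id, uncertain); wikidata_id is None for "Match not found"."""
    if not candidates:
        return None, False                      # no search result at all
    guess = None
    for cand in candidates:
        birth_ok = (wikipast.birth_year is not None
                    and wikipast.birth_year == cand.birth_year)
        death_ok = (wikipast.death_year is not None
                    and wikipast.death_year == cand.death_year)
        if wikipast.bnf_id and cand.bnf_id:
            if wikipast.bnf_id == cand.bnf_id:
                return cand.qid, False          # same BNF ID: certain match
            if birth_ok and death_ok and guess is None:
                guess = cand.qid                # BNF IDs differ, dates agree
        elif birth_ok and death_ok:
            return cand.qid, False              # both dates agree
        elif birth_ok or death_ok:
            return cand.qid, True               # only one date agrees
    if guess is not None:
        return guess, True
    if wikipast.birth_year is None and wikipast.death_year is None:
        return candidates[0].qid, True          # nothing to compare: first result
    return None, False
```

For example, `choose_match(Person(), [Person("Q4"), Person("Q5")])` returns `("Q4", True)`: with no BNF ID and no dates on the Wikipast side, the first search result is kept and flagged as uncertain.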

Choice of strategy

- The BNF ID takes precedence over the dates of birth and death:

As stated above, a BNF ID is a unique number. Two entries with the same BNF ID are therefore the same character, and two entries with different BNF IDs should be two different characters. Since this is not true of birth dates, it seemed natural to use the BNF ID first. Moreover, when comparing dates, we compare only the years, because errors in the months and days are too frequent.
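As a minimal sketch of the year-only comparison, assuming that Wikidata returns times in the form `+1823-09-09T00:00:00Z` and that Wikipast dates are written `1823.09.09` (the helper names below are hypothetical):

```python
def wikidata_year(time_value: str) -> str:
    """Year of a Wikidata time string such as '+1823-09-09T00:00:00Z'."""
    return time_value[1:5]          # skip the leading sign, keep four digits


def wikipast_year(date: str) -> str:
    """Year of a Wikipast date such as '1823.09.09'."""
    return date[0:4]


def same_year(time_value: str, date: str) -> bool:
    """True when both dates fall in the same year; month and day are ignored."""
    return wikidata_year(time_value) == wikipast_year(date)
```

With this comparison, `same_year("+1823-09-09T00:00:00Z", "1823.09.10")` is true even though the days differ, which is the tolerance described above.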

- The guess method:

Why did we introduce this guess technique for entries with different BNF IDs but matching dates of birth and death? To err is human, and we observed a few erroneous BNF IDs on Wikidata. Not wanting such errors to weigh too heavily on our results, we decided to let the bot "guess" that the BNF ID is wrong.

- Uncertain identification:

When too little information can be verified between Wikipast and Wikidata, the Wikidataficator still tries its luck and selects the most likely entry (one with at least one date in common, or the first in the list if no birth or death dates are available on Wikipast). The "Uncertain identification" label tells users that a little more research is advisable to make sure it is the right person. We think that this is not too great a constraint, and that this result is more useful than a simple "Match not found".

- Match not found:

The 500'000 entries inserted by the GallicaSPARQLBot are authors, some well known and others much less so. That is why roughly half of these authors are not present on Wikidata at all. When nobody of the same name exists on Wikidata, no match is possible. In that case the Wikidataficator inserts on Wikipast a direct link for creating the character's page on Wikidata.

Performance Evaluation

First version

At first, the bot was tested on the entries from the "Biographies" page. Since the Q5 flag had not yet been inserted at that time, all checks were done with dates of birth and death. The results are still visible on this page. They were very encouraging, with only 4 out of 50 entries requiring human verification. These 4 entries have been verified and are correct. Since all the entries not requiring human verification were correct as well, the accuracy was 100%. We manually added the Q5 flag to these pages afterwards, for a consistent syntax. After that, we were able to move on to the next step, verification using the identifier of the National Library of France (BNF ID).

Final version

Since the final version of the bot is the most interesting, its results are analysed in more detail here.

The bot was first launched on several subsets of pages, to avoid major problems that would affect too much data. Indeed, since the GallicaSPARQLBot had inserted nearly 500'000 entries on Wikipast, we did not want to risk altering that much data. After some minor adjustments, we wanted to launch the bot on all the data. However, we encountered a problem beyond our control: the Wikipast server was quickly overloaded and stopped responding after a few thousand entries. We therefore had to run the bot in small batches. The statistics below cover about 400,000 entries.

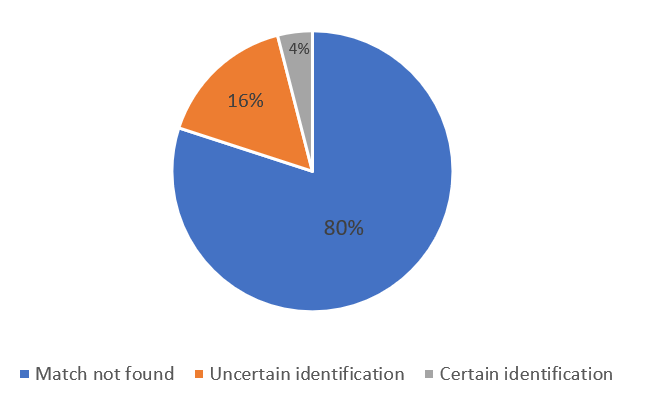

Here are the calculated statistics:

- Percentage of "Match not found"

- Percentage of "Uncertain identification"

- Number of BNF IDs that do not match between Wikipast and Wikidata

Accuracy

- Match not found

"Match not found" accounts for about 80% (317,000 / 400,000) of the entries on Wikipast. This very high percentage is explained by the fact that the authors inserted by the GallicaSPARQLBot are often little known.

- Uncertain identification

"Uncertain identification" accounts for about 16% (64,321 / 400,000) of the data. Lowering this percentage would have increased the number of "Match not found".

- Inconsistent BNF ID

Finally, when the BNF ID does not match between Wikidata and Wikipast but a character is still selected because of his dates of birth and death (guess method), the result seemed correct to us (we checked about ten cases; see Joseph Leidy for example). These cases are counted as "Uncertain identification" and represent approximately 721 people out of 400'000.

- Manual control

In addition to the statistics described above, we manually analyzed about a hundred entries processed by the Wikidataficator to check whether the results were those expected. This was the case for all identifications made with certainty: entries without the "Uncertain identification" label have either a matching BNF ID or, failing a BNF ID, consistent birth and death dates. The entries marked "Match not found" are indeed absent from Wikidata. Among the "Uncertain identification" entries, about twenty in this sample, the identification still seemed correct in about half of the cases. In the other half, the bot had no information on dates of birth and death and had simply selected the first character in the list.

Some entries may carry both the "Match not found" and the "Uncertain identification" labels. This is due to the first version of our bot, which added "Uncertain identification" to every "Match not found". This was later corrected, and these cases were not taken into account in the calculation of the statistics.
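The percentages quoted in this section can be re-derived from the raw counts. The snippet below is only a check of the arithmetic, using the figures given above and a rounded total of 400,000 entries; the dictionary labels are chosen for illustration.

```python
total = 400_000  # approximate number of processed entries

counts = {
    "Match not found": 317_000,
    "Uncertain identification": 64_321,
    "BNF ID mismatch kept as guess": 721,  # already included in the line above
}

# Share of each category, in percent of all processed entries
shares = {label: round(100 * n / total, 2) for label, n in counts.items()}

for label, pct in shares.items():
    print(f"{label}: {pct}%")
```

This gives 79.25% for "Match not found", 16.08% for "Uncertain identification" and 0.18% for the BNF ID mismatches.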

Speed

The slowest phase of the bot is the one that generates the list of entries to process (the list of all Wikipast characters). Wikipast now has more than 500'000 characters with the Q5 flag, and the bot must list them all. We measured about 1 hour and 20 minutes to build the list.
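One way such a list can be built is with the MediaWiki search API and its continuation mechanism, fetching one batch of results per request. The sketch below is an assumption about how this could be done, not the bot's actual listing code: `list_flagged_pages` is a hypothetical helper, and the HTTP call is passed in as a `fetch` function so that the paging logic stays independent of the network.

```python
from typing import Callable, Dict, List


def list_flagged_pages(fetch: Callable[[Dict[str, str]], dict],
                       flag: str = "Q5") -> List[str]:
    """Collect the titles of all pages returned by a full-text search for `flag`.

    `fetch` takes the query parameters and returns the decoded JSON response.
    """
    params = {"action": "query", "list": "search", "srsearch": flag,
              "srlimit": "500", "format": "json"}
    titles: List[str] = []
    cont: Dict[str, str] = {}
    while True:
        data = fetch({**params, **cont})
        titles.extend(hit["title"] for hit in data["query"]["search"])
        if "continue" not in data:
            return titles                       # last batch reached
        # Pass the continuation values back unchanged in the next request
        cont = {k: str(v) for k, v in data["continue"].items()}
```

With the `requests` library, `fetch` could be `lambda p: requests.get(baseurl + 'api.php', params=p).json()`. At 500 titles per request, listing 500'000 pages takes on the order of a thousand sequential requests, which is consistent with this phase being slow.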

After that, the processing of the entries is relatively fast, as long as the server responds. We introduced multiprocessing so that the bot runs in several parallel worker processes. With 4 workers, about 300 characters are processed per minute. We could not increase the number of workers because the Wikipast server became saturated.
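A minimal sketch of this kind of batched parallel processing, assuming a module-level function that handles one entry and returns 1 or 0; the helper names and the batch size of 50 are illustrative choices, not a description of the exact code:

```python
import multiprocessing as mp
from typing import Callable, List, Sequence


def batches(jobs: Sequence[str], size: int) -> List[Sequence[str]]:
    """Split the job list into consecutive slices of at most `size` entries."""
    return [jobs[i:i + size] for i in range(0, len(jobs), size)]


def estimated_hours(n_entries: int, per_minute: float) -> float:
    """Processing time in hours at a constant rate of entries per minute."""
    return n_entries / per_minute / 60


def run_in_batches(jobs: Sequence[str],
                   process_entry: Callable[[str], int],
                   workers: int = 4,
                   batch_size: int = 50) -> int:
    """Process all jobs batch by batch with a pool of worker processes.

    `process_entry` must be defined at module level so it can be pickled.
    Returns the sum of the values returned by `process_entry`.
    """
    done = 0
    with mp.Pool(workers) as pool:
        for batch in batches(jobs, batch_size):
            done += sum(pool.map(process_entry, batch))
    return done
```

At the measured rate of about 300 entries per minute, `estimated_hours(400_000, 300)` comes to roughly 22 hours of uninterrupted processing, which gives an idea of why server availability mattered so much.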

Critical analysis

Although the observed results have always been those expected, it is worth discussing the relevance of the choices we made. Our criteria are quite flexible. For example, if the BNF IDs are not available, a single matching date (birth or death) is enough for us to select a character, with the mention "Uncertain identification". If birth and death dates are not available on Wikipast and no matching BNF ID is found on Wikidata, the first result in the list is selected. We could have decided to tighten these criteria, which would probably improve the accuracy of the results: there would be far fewer mistakes, because there would be no "Uncertain identification" at all. On the other hand, it would increase the number of "Match not found" even further, although several characters simply had an incorrect BNF ID or too little information. With the method we chose, a "Match not found" assures the user that the character is not present on Wikidata, and an "Uncertain identification" indicates that no better match exists. A single click is then enough to check whether the result is consistent.

Example of results

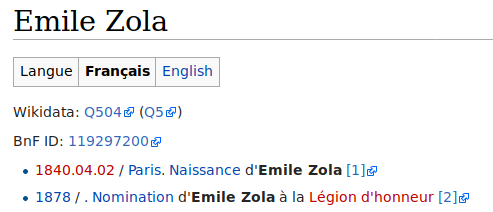

Entry verified by BnF number or birth / death dates:

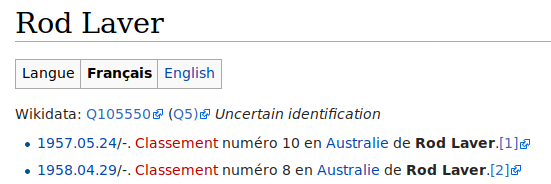

Entry with too little information, no BnF number or date of birth / death:

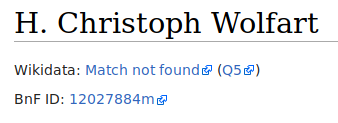

Non-existent entry on Wikidata:

Code

<Nowiki>
import requests
import json
from bs4 import BeautifulSoup
from urllib.request import urlopen
from dateutil.parser import parse
import urllib.parse
import progressbar
import uuid
import getpass
import time
from passlib.hash import pbkdf2_sha256
import multiprocessing as mp
import sys

sys.setrecursionlimit(1000000)

def getWikidataBirthdayDeathdayBnfID(number_wiki):
    # Fetch the full entity record and read three claims from it:
    # P569 = date of birth, P570 = date of death, P268 = BnF ID
    response = urlopen('https://www.wikidata.org/wiki/Special:EntityData/' + number_wiki + '.json')
    page_source = json.loads(response.read())
    claims = page_source['entities'][number_wiki]['claims']
    try:
        birthdate_wikidata = claims['P569'][0]['mainsnak']['datavalue']['value']['time']
    except (KeyError, IndexError, TypeError):
        birthdate_wikidata = "N/A"
    try:
        deathdate_wikidata = claims['P570'][0]['mainsnak']['datavalue']['value']['time']
    except (KeyError, IndexError, TypeError):
        deathdate_wikidata = "N/A"
    try:
        # the BnF ID is an external identifier, so its value is a plain string
        bnfid_wikidata = claims['P268'][0]['mainsnak']['datavalue']['value']
    except (KeyError, IndexError, TypeError):
        bnfid_wikidata = "N/A"
    return (birthdate_wikidata, deathdate_wikidata, bnfid_wikidata)

def getWikidataNumberFromBnFID(bnf_id):
    # Full-text search on Wikidata for the BnF ID; returns the first hit or "-1"
    try:
        url = urlopen("https://www.wikidata.org/w/api.php?action=query&list=search&srwhat=text&srsearch=" + bnf_id + "&format=json")
        source = json.load(url)
        return source['query']['search'][0]['title']
    except Exception:
        return "-1"

# Author Robin Mamie
baseurl = 'http://wikipast.epfl.ch/wikipast/'
import lxml.etree
content = ''

def import_page(content, title):
    # Append the non-empty lines of the latest revision of `title` to `content`
    params = {"format": "xml", "action": "query", "prop": "revisions", "rvprop": "timestamp|user|comment|content"}
    params["titles"] = "API|%s" % urllib.parse.quote(title.replace(' ', '_'))
    qs = "&".join("%s=%s" % (k, v) for k, v in params.items())
    url = baseurl + 'api.php?%s' % qs
    tree = lxml.etree.parse(urlopen(url))
    revs = tree.xpath('//rev')
    if revs:
        old_content = revs[-1].text.split('\n')
        listed_old_content = [x for x in old_content if x]
        content.extend(listed_old_content)

def insertWikidataLink(link, content, uncertainVerification):
    human_ver_string = ''
    if uncertainVerification:
        human_ver_string = " ''Uncertain identification''"
    for i in range(len(content)):
        l = content[i]
        if (l.find('Wikidata: [') == -1):
            # Line of the form "Wikidata: Q5": turn the bare flag into a link
            if l[10:12] == 'Q5':
                insertPoint = l.find(':') + 1
                Q5URL = '([https://www.wikidata.org/wiki/Q5'
                content[i] = str(l[0:insertPoint]) + Q5URL + str(l[insertPoint:]) + str('])')
                l = content[i]
            # Insert the link to the matched Wikidata entry right after the colon
            if l[0:8] == 'Wikidata':
                insertionPoint = l.find(':') + 1
                content[i] = str(str(l[0:insertionPoint]) + str(link) + str(l[insertionPoint:]) + human_ver_string)

def cleanNameOfUUID(name):
    # Drop a trailing parenthesised suffix (and the space before it) from the name
    idx = name.find("(")
    if idx != -1:
        name = name[:idx-1]
    return name

user = 'Wikidataficator'
passw = getpass.getpass('Password:')

# Check the typed password against the stored hash before doing anything
f = open('config/passwordhash.dat', 'r')
hash = f.read()
f.close()
if (not pbkdf2_sha256.verify(passw, hash)):
    print('Invalid password!')
    exit()

baseurl = 'http://wikipast.epfl.ch/wikipast/'
summary = 'Wikidataficator update'
name = 'Wikidataficator'

# Login request
payload = {'action': 'query', 'format': 'json', 'utf8': '', 'meta': 'tokens', 'type': 'login'}
r1 = requests.post(baseurl + 'api.php', data=payload)

# login confirm
login_token = r1.json()['query']['tokens']['logintoken']
payload = {'action': 'login', 'format': 'json', 'utf8': '', 'lgname': user, 'lgpassword': passw, 'lgtoken': login_token}
r2 = requests.post(baseurl + 'api.php', data=payload, cookies=r1.cookies)

# get edit token2
params3 = '?format=json&action=query&meta=tokens&continue='
r3 = requests.get(baseurl + 'api.php' + params3, cookies=r2.cookies)
edit_token = r3.json()['query']['tokens']['csrftoken']

edit_cookie = r2.cookies.copy()
edit_cookie.update(r3.cookies)

# 1. find the content and print it
url_wikipast = urlopen("http://wikipast.epfl.ch/wikipast/index.php/Biographies")
source = url_wikipast.read()
soup = BeautifulSoup(source, 'html.parser')

wikidata_addr = 'https://www.wikidata.org/wiki/'
wikidata_limit = 30

def processPageToAddWikidataNumber(a):
    number_wiki = ''
    print(a)
    wikidata_addr = 'https://www.wikidata.org/wiki/'
    wikidata_limit = 30

    # Fetch the Wikipast page, retrying for as long as the server returns nothing
    content = []
    import_page(content, a)
    sleepTime = 1
    while len(content) == 0:
        time.sleep(sleepTime)
        import_page(content, a)
        print('!!! Attempt at fetching again. Will sleep for :' + str(sleepTime) + ' Person name :' + a + '\n')

    birth_found = False
    death_found = False
    BnFID_found = False
    BnF_id = ''

    # Read the BnF ID and the birth and death dates from the Wikipast page.
    # The marker strings are written as they appear in this English listing.
    for c in content:
        if (c.find('Wikidata: [') != -1):
            print('Already processed')
            print(c)
            return 0
        if (c.find('BnF ID:') != -1):
            print('BnF ID : ' + c.split()[-1][0:-1])
            BnF_id = c.split()[-1][0:-1]
            BnFID_found = True
        if (c.find('Birth of [[' + a.replace('_', ' ')) != -1):
            print('Birth on:' + c.split('[')[2].split(']')[0])
            birthdate_wikipast = c.split('[')[2].split(']')[0]
            birth_found = True
        if ((c.find('Death of [[' + a.replace('_', ' ')) != -1) or (c.find('Deaths of [[' + a.replace('_', ' ')) != -1)):
            print('Death on:' + c.split('[')[2].split(']')[0])
            death_found = True
            deathdate_wikipast = c.split('[')[2].split(']')[0]

    # WIKIDATA
    url = "https://www.wikidata.org/w/api.php?action=wbsearchentities&search="
    url = url + urllib.parse.quote(cleanNameOfUUID(a).replace(' ', '_'))
    url = url + "&language=en&limit=" + str(wikidata_limit) + "&format=json"
    url = urlopen(url)
    source = json.load(url)

    matchFound = False
    resultFound = False  # indicates that at least one page has been found
    uncertainIdentification = False
    guess_number_wiki = -1

    for itemsQuerryNumber in range(wikidata_limit):
        try:
            number_wiki = source['search'][itemsQuerryNumber]['id']
        except (KeyError, IndexError):
            break
        resultFound = True
        ## Looking for the date on wikidata
        (birthdate_wikidata, deathday_wikidata, bnfid_wikidata) = getWikidataBirthdayDeathdayBnfID(number_wiki)

        birthdate_wikidata_found = (birthdate_wikidata != 'N/A')
        deathday_wikidata_found = (deathday_wikidata != 'N/A')
        bnfid_wikidata_found = (bnfid_wikidata != 'N/A')

        # Only the years are compared: characters 1 to 4 of the Wikidata time
        # string (after the sign) against the first four of the Wikipast date
        birth_match = birth_found and birthdate_wikidata[1:5] == birthdate_wikipast[0:4]
        death_match = death_found and deathday_wikidata[1:5] == deathdate_wikipast[0:4]

        if (BnFID_found and bnfid_wikidata_found):
            if (BnF_id == bnfid_wikidata):
                matchFound = True
                break
            elif (birth_match or death_match):
                # BnF IDs differ but a year agrees: keep the first such entry as a guess
                if (guess_number_wiki == -1):
                    guess_number_wiki = number_wiki
        else:
            if (birth_match and death_match):
                matchFound = True
                break
            elif (birth_match or death_match):
                uncertainIdentification = True
                matchFound = True
                break

    if (not matchFound and resultFound):
        if (guess_number_wiki != -1):
            f = open('./botoutput/guess/' + a + '.log', 'w')
            f.write('1')

fnt ('ERROR with' + a + '\ n \ n')

return 0 '

    # Insert the link into the page content and write the page back to Wikipast
    insertWikidataLink('[' + wikidata_addr + number_wiki + ' ' + url_name + ']', content, uncertainIdentification)
    page = ''
    for c in content:
        page += str(c)
        page += '\n\n'
    payload = {'action': 'edit', 'assert': 'user', 'format': 'json', 'utf8': '', 'text': page, 'summary': summary, 'title': a.replace(' ', '_'), 'token': edit_token}
    if (len(content) != 0):
        r4 = requests.post(baseurl + 'api.php', data=payload, cookies=edit_cookie)

    # Record the outcome in per-entry log files, used later for the statistics
    f = open('./botoutput/uncertainId/' + a + '.log', 'w')
    if uncertainIdentification:
        f.write('1')
    else:
        f.write('0')
    f.close()

    if matchFound:
        f = open('./botoutput/matchFound/' + a + '.txt', 'w')
        f.write('1')
        f.close()
        return 1
    f = open('./botoutput/matchFound/' + a + '.txt', 'w')
    f.write('0')
    f.close()
    return 0

import pickle

joblist = pickle.load(open('joblist.b', 'rb'))
print('Number of jobs in joblist.b: ' + str(len(joblist)))

continueProcessing = True
while continueProcessing:
    # Read the current position and the upper bound from the config files
    f = open('config/startPoint.dat', 'r')
    startOfFile = int(f.read())
    f.close()

    f = open('config/endPoint.dat', 'r')
    maxFileIdx = int(f.read())
    f.close()

    # Process at most 50 entries per pass
    endOfFile = min(startOfFile + 50, maxFileIdx, len(joblist))
    if startOfFile == maxFileIdx:
        exit()

    print('Processing from: ' + str(startOfFile) + '\t\tTo: ' + str(endOfFile))
    joblist1 = joblist[startOfFile:endOfFile]